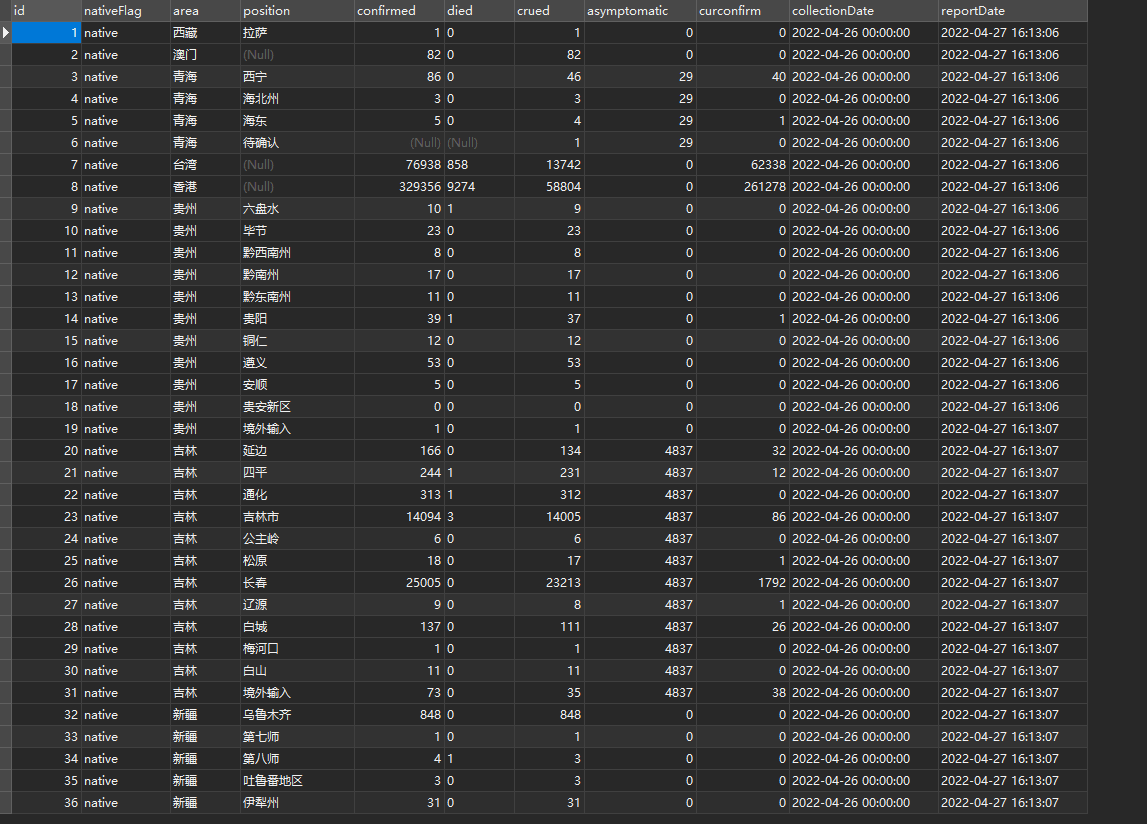

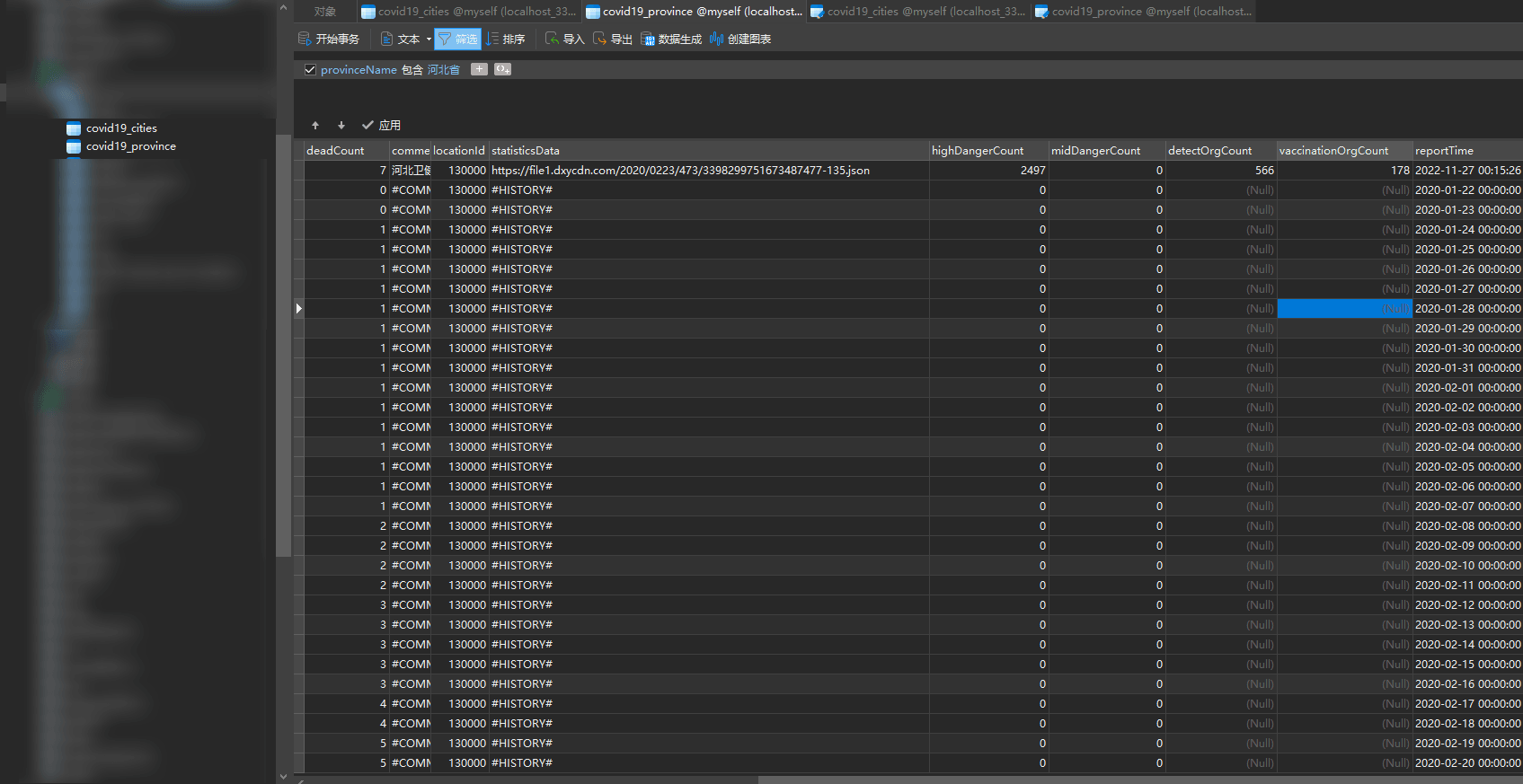

# Introduction

Today I took a look at the COVID-19 data I have been scraping for more than half a year. I only have myself to blame for not carefully checking the field mapping when I built the scraper, but it is too late to do anything about that now. Perhaps I never fully understood that Baidu API back then.

# Recommendation

An expert maintains a dataset scraped from DXY (丁香园) that is pulled daily. If you need the data, download it here and don't waste your money: https://github.com/BlankerL/DXY-COVID-19-Data/releases

# Code

There is already plenty of code for this online. I didn't think much about it at the time and just started writing my own. It wasn't wasted effort, though; I treat it as coding practice.

```python
import datetime
import json

import pymysql
import requests

connection = None
knl_url = 'https://ncov.dxy.cn/ncovh5/view/pneumonia'
start_trait = 'window.getAreaStat = '
user = 'work'
password = 'root'
host = '127.0.0.1'
port = 8306
database = 'covid'
province_column = []


def get_connection():
    """Return a shared MySQL connection, reconnecting if it has dropped."""
    global connection
    if connection is None:
        connection = pymysql.Connection(host=host, port=port, database=database,
                                        user=user, password=password)
    else:
        connection.ping(reconnect=True)
    return connection


def get_content():
    """Download the DXY page and cut out the JSON assigned to window.getAreaStat."""
    response = requests.get(knl_url)
    response.encoding = 'utf-8'
    page = response.text
    start_index = page.find(start_trait)
    if start_index == -1:
        raise ValueError(f'{start_trait!r} not found in page')
    end_index = page.find('</script>', start_index)
    # The script body ends with "}catch(e){}", which is not part of the JSON.
    return page[start_index + len(start_trait):end_index - len('}catch(e){}')]


def deal(data):
    """Store every province record and its nested city records."""
    province_datas = json.loads(data)
    # Uncomment to create the tables from sample records:
    # create_table_prepare(province_datas[0], database, 'covid19_province')
    # create_table_prepare(province_datas[5]['cities'][0], database, 'covid19_cities')
    for item in province_datas:
        insert(item, 'covid19_province')
        if item.get('cities'):
            insert(item['cities'], 'covid19_cities')


def insert(datas, table: str):
    """Insert one record (dict) or a list of records into the given table."""
    if isinstance(datas, dict):
        k = ['reportTime']
        v = [datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')]
        for key, value in datas.items():
            if key in ('cities', 'dangerAreas'):
                continue
            k.append(f'`{key}`')
            v.append(str(value))
        # Pass values as query parameters so quotes in the data cannot break the SQL.
        placeholders = ','.join(['%s'] * len(v))
        sql = f'INSERT INTO {database}.{table}({",".join(k)}) VALUES({placeholders})'
        print('Inserting row into', table)
        get_connection().cursor().execute(sql, v)
        get_connection().commit()
    else:
        for item in datas:
            insert(item, table)


def create_table_prepare(json_data: dict, database_name: str, table_name: str):
    """Create a table whose columns mirror the keys of a sample record."""
    create_table_sql = f'''CREATE TABLE {database_name}.{table_name}(
        id int auto_increment primary key unique comment "auto-increment ID" ,
        reportTime datetime ,'''
    for column, value in json_data.items():
        if isinstance(value, list):
            continue  # nested lists (e.g. cities) get their own table
        # Exact type check, so that bools are stored as varchar like other values.
        col_type = 'int' if type(value) is int else 'varchar(128)'
        create_table_sql += f''' `{column}` {col_type} ,'''
    # Drop the trailing comma and close the column list.
    create_table_sql = create_table_sql[0:-1] + ")"
    get_connection().cursor().execute(create_table_sql)


def get_history_data():
    """Backfill per-province history from each province's statisticsData URL."""
    # The original query hard-coded the schema name 'myself'; use the configured one.
    c_sql = ("select COLUMN_NAME from information_schema.columns "
             "where table_schema = %s and table_name = 'covid19_province'")
    cursor = get_connection().cursor(cursor=pymysql.cursors.DictCursor)
    cursor.execute(c_sql, (database,))
    for row in cursor.fetchall():
        province_column.append(row['COLUMN_NAME'])
    # Selecting non-grouped columns requires ONLY_FULL_GROUP_BY to be off in sql_mode.
    d_sql = f"""select provinceName, provinceShortName, `comment`, locationID, statisticsData
                from {database}.covid19_province group by provinceName"""
    cursor = get_connection().cursor(cursor=pymysql.cursors.DictCursor)
    cursor.execute(d_sql)
    for ditem in cursor.fetchall():
        # Named "history" so it does not shadow the json module.
        history = requests.get(ditem['statisticsData']).json()['data']
        for item in history:
            k = ['reportTime', 'provinceName', 'provinceShortName', '`comment`',
                 'locationID', 'statisticsData']
            v = ['1970-01-01 00:00:00', ditem['provinceName'], ditem['provinceShortName'],
                 '#COMMENT#', str(ditem['locationID']), '#HISTORY#']
            for key in item:
                if key in province_column:
                    k.append(f'`{key}`')
                    v.append(str(item[key]))
                elif key == 'dateId':
                    # dateId is sliced as YYYYMMDD and becomes the report time.
                    date_id = str(item[key])
                    v[0] = f'{date_id[0:4]}-{date_id[4:6]}-{date_id[6:8]} 00:00:00'
            placeholders = ','.join(['%s'] * len(v))
            sql = f'INSERT INTO {database}.covid19_province({",".join(k)}) VALUES({placeholders})'
            get_connection().cursor().execute(sql, v)
            get_connection().commit()
        print('Loaded historical data for', ditem['provinceName'])


if __name__ == '__main__':
    deal(get_content())
    # get_history_data()
```

# Conclusion

A quick analysis: historical data is available at the province level, while the city level only has data for the current day.

© Reposting with proper attribution is permitted.
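
The scraper above depends on two details of the page: the JSON sits after the `window.getAreaStat = ` marker inside a script tag, and city records are nested under each province in a `cities` list. The sketch below shows both steps offline. It is only an illustration under assumptions: `extract_area_stat`, `split_levels`, and `SAMPLE_HTML` are hypothetical names, the sample page and the `cityName` field are made up, and `json.JSONDecoder.raw_decode` is swapped in as one possible way to avoid trimming the trailing `}catch(e){}` by hand.

```python
import json

START_TRAIT = 'window.getAreaStat = '


def extract_area_stat(html: str) -> list:
    """Parse the JSON value that follows START_TRAIT in the page source."""
    start = html.find(START_TRAIT)
    if start == -1:
        raise ValueError('marker not found in page')
    start += len(START_TRAIT)
    # raw_decode parses exactly one JSON value starting at `start` and
    # ignores whatever follows it, such as the trailing "}catch(e){}".
    value, _end = json.JSONDecoder().raw_decode(html, start)
    return value


def split_levels(provinces: list) -> tuple:
    """Separate province records from their nested city records."""
    province_rows, city_rows = [], []
    for province in provinces:
        # Everything except the nested list belongs to the province row.
        province_rows.append({k: v for k, v in province.items() if k != 'cities'})
        city_rows.extend(province.get('cities') or [])
    return province_rows, city_rows


# Made-up sample page, shaped like the script tag the scraper looks for.
SAMPLE_HTML = (
    '<script>try { window.getAreaStat = '
    '[{"provinceName": "Province A", "cities": [{"cityName": "City A1"}]},'
    ' {"provinceName": "Province B", "cities": []}]'
    '}catch(e){}</script>'
)

provinces, cities = split_levels(extract_area_stat(SAMPLE_HTML))
print(len(provinces), 'province rows,', len(cities), 'city rows')
```

Keeping the parsing separate from the network and database calls makes it possible to check the field mapping against a saved copy of the page before any rows are written.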